10kg Kōwhai

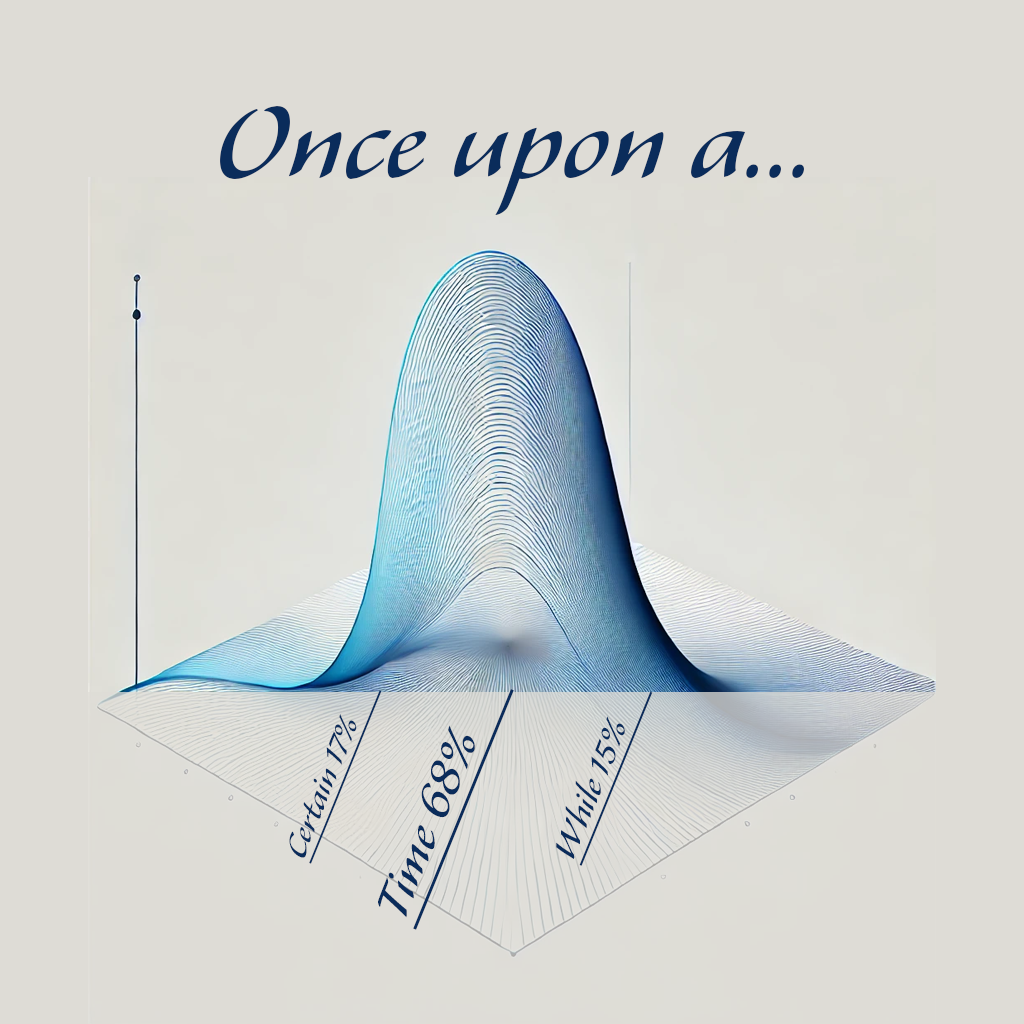

So with that quick primer out of the way, the thing to know about cognitive load is that recall (e.g. “Once upon a time”) is cheap, but tapping into these generalisable skills (e.g. “Use New Zealand English”), which are often called emergent capabilities, is expensive. Just to make things harder, each task in a prompt is a combination of both recall and emergent capabilities, with no visibility of the ratio or of how “expensive” the task is overall.

This is where I think we can use the 10kg tree riddle from my previous blog post to help visualise the problem. To recap, we started with this riddle from the ‘Easy problems LLMs get wrong’ dataset:

A 2kg tree grows in a planted pot with 10kg of soil. When the tree grows to 3kg, how much soil is left?

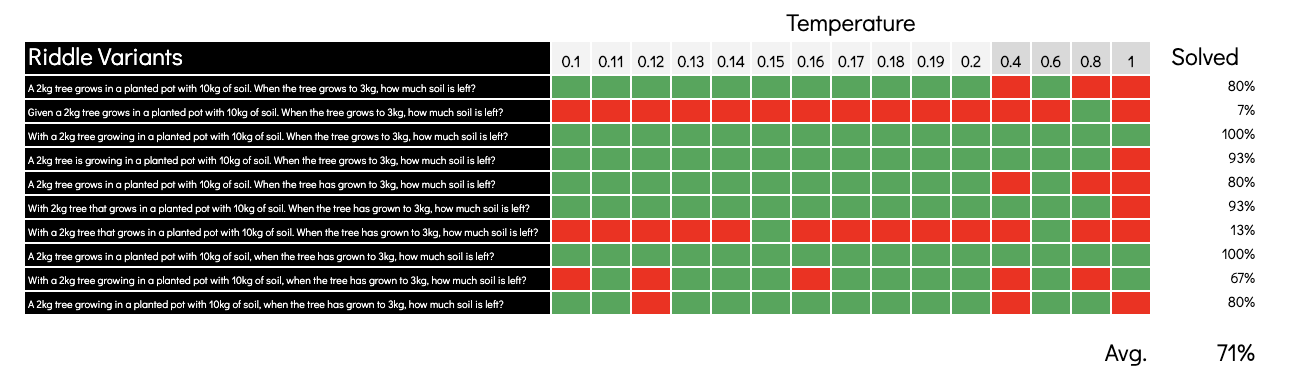

We can then “expand” that to 10 riddles by creating variants that only differ by a few tokens:

- A 2kg tree grows in a planted pot with 10kg of soil. When the tree grows to 3kg, how much soil is left?

- Given a 2kg tree grows in a planted pot with 10kg of soil. When the tree grows to 3kg, how much soil is left?

- With a 2kg tree growing in a planted pot with 10kg of soil. When the tree grows to 3kg, how much soil is left?

- A 2kg tree is growing in a planted pot with 10kg of soil. When the tree grows to 3kg, how much soil is left?

- A 2kg tree grows in a planted pot with 10kg of soil. When the tree has grown to 3kg, how much soil is left?

- With 2kg tree that grows in a planted pot with 10kg of soil. When the tree has grown to 3kg, how much soil is left?

- With a 2kg tree that grows in a planted pot with 10kg of soil. When the tree has grown to 3kg, how much soil is left?

- A 2kg tree grows in a planted pot with 10kg of soil, when the tree has grown to 3kg, how much soil is left?

- With a 2kg tree growing in a planted pot with 10kg of soil, when the tree has grown to 3kg, how much soil is left?

- A 2kg tree growing in a planted pot with 10kg of soil, when the tree has grown to 3kg, how much soil is left?

Why are we creating the variants? Because when you’re approaching the cognitive limit, the impact of tokenization and embedding becomes more pronounced (i.e. the same things that trip up LLMs when counting how many R’s are in “Strawberry”).

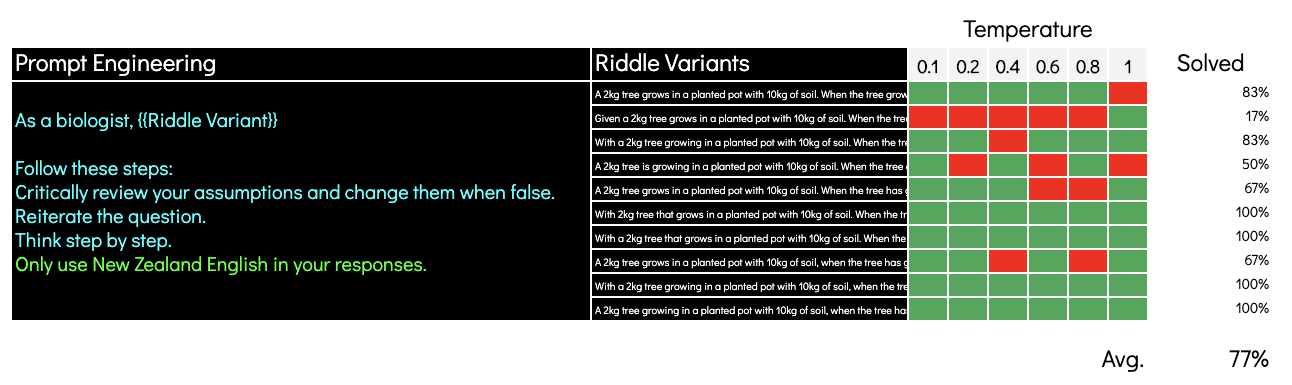

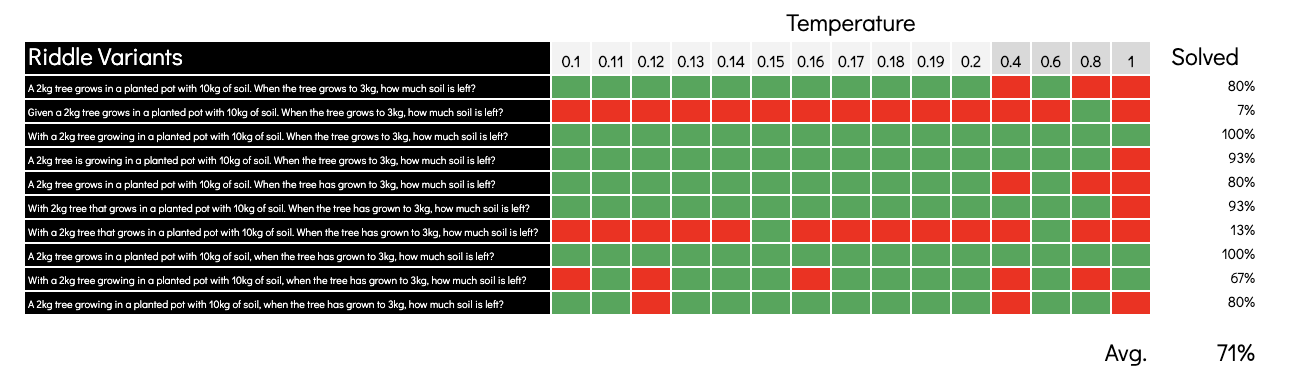

A prompt that’s successful may have close “neighbours” that are failing. You can also vary the temperature for even more visibility, and here’s how that looks:

What jumps out here:

- Tokenization/embedding impacts the outcome more than temperature: a prompt that fails tends to keep failing across a range of temperatures.

- The word “Given” doesn’t radically change the meaning of the riddle but has a large negative impact on the LLM’s ability to solve the riddle.

- The riddle that starts “With 2kg…” performs perfectly fine despite the weird grammar.

- I initially set this up with a temperature range of 0.1 to 0.2, but when I noticed this wasn’t performing as poorly as in my previous blog post I realised the default =CLAUDE() function must use a higher temperature. I tacked on 0.4 to 1, and the 0.4 setting got the same 50% score as I got in those tests.

- I have no idea what’s happening at 0.6 temperature. I double checked and it doesn’t look like a mistake in the test. Random good luck?
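For anyone wanting to reproduce this kind of variant-by-temperature grid outside a spreadsheet, here is a minimal Python sketch. It is not how the results above were produced (those came from a `=CLAUDE()` spreadsheet function). The names `pass_rate_grid`, `overall_score`, `ask` and `grade` are hypothetical, the temperature list is illustrative, and the stub model and the "10kg" grading rule are assumptions used only to exercise the code, not measurements.

```python
from typing import Callable, Dict, List

Grid = Dict[str, Dict[float, float]]


def pass_rate_grid(
    variants: List[str],
    temperatures: List[float],
    ask: Callable[[str, float], str],   # hypothetical: (prompt, temperature) -> reply
    grade: Callable[[str], bool],       # hypothetical: reply -> passed?
    runs: int = 1,
) -> Grid:
    """Return the pass rate for every (variant, temperature) cell."""
    grid: Grid = {}
    for prompt in variants:
        row: Dict[float, float] = {}
        for temp in temperatures:
            passes = sum(grade(ask(prompt, temp)) for _ in range(runs))
            row[temp] = passes / runs
        grid[prompt] = row
    return grid


def overall_score(grid: Grid) -> float:
    """Average the pass rate over all cells in the grid."""
    cells = [rate for row in grid.values() for rate in row.values()]
    return sum(cells) / len(cells)


# --- Toy usage with stand-ins (replace with a real model call and grader) ---

def fake_ask(prompt: str, temperature: float) -> str:
    # Stub only: hard-codes a failure for one prefix so the grid has two outcomes.
    return "9kg of soil" if prompt.startswith("Given") else "10kg of soil"


def fake_grade(reply: str) -> bool:
    # Assumption: a passing answer says the full 10kg of soil remains.
    return "10kg" in reply


demo_variants = [
    "A 2kg tree grows in a planted pot with 10kg of soil. "
    "When the tree grows to 3kg, how much soil is left?",
    "Given a 2kg tree grows in a planted pot with 10kg of soil. "
    "When the tree grows to 3kg, how much soil is left?",
]
demo_temperatures = [0.1, 0.2, 0.4]  # illustrative values only

demo_grid = pass_rate_grid(demo_variants, demo_temperatures, fake_ask, fake_grade)
for prompt, row in demo_grid.items():
    print(prompt[:20], row)
print("overall:", overall_score(demo_grid))
```

Keeping `ask` and `grade` as parameters means the same loop works whichever model or marking rule you plug in, and raising `runs` gives a per-cell pass rate rather than a single pass/fail.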

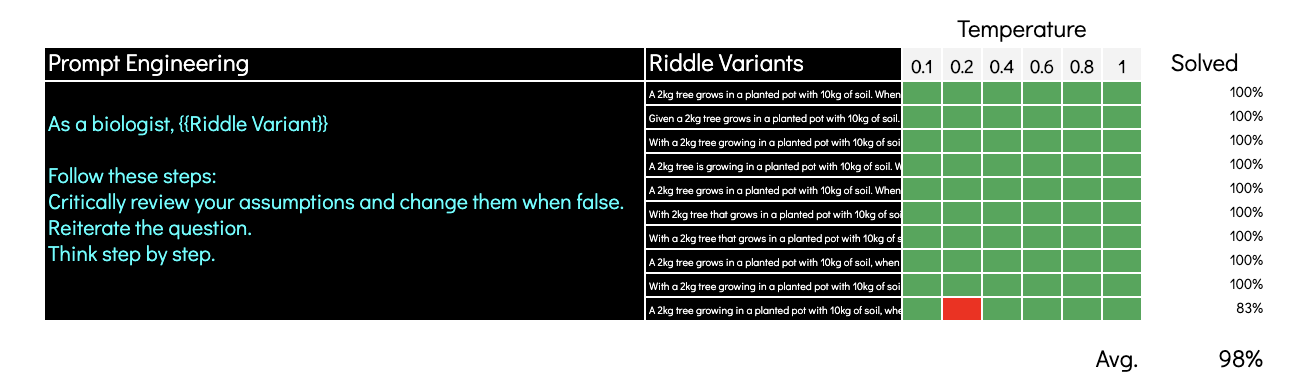

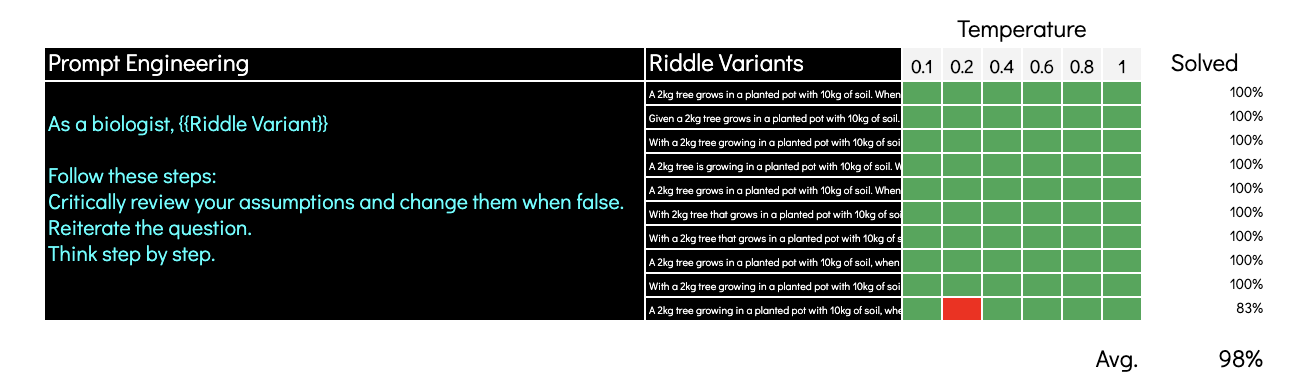

So now let’s improve these results by adding some prompt engineering:

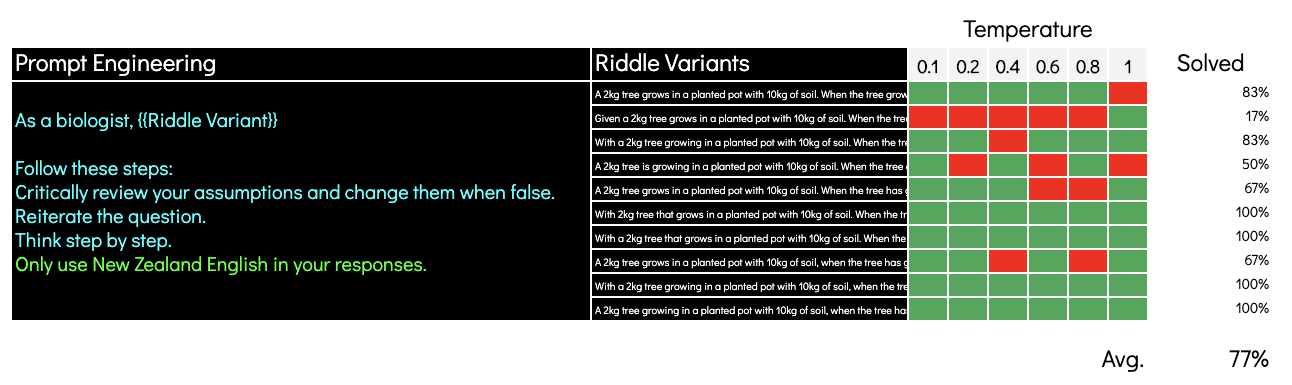

That boosts us up to 98%, but I suspect we’re now at the limit of how many instructions we can give the LLM. Let’s test that by also asking it to “Only use New Zealand English in your responses”:

There we have it: our prompt engineering has pushed us to the LLM’s cognitive limit, and adding this one last instruction took it too far. Our performance takes a substantial hit, despite the riddle answers not actually having any New Zealand spelling mistakes.

Again, there’s nothing stopping us from solving NZ spelling in a follow-up prompt or just by using spellcheck. This is why we strongly recommend against using this prompt in our classes, as you’ll often miss that the LLM is returning nonsense (with perfect spelling).

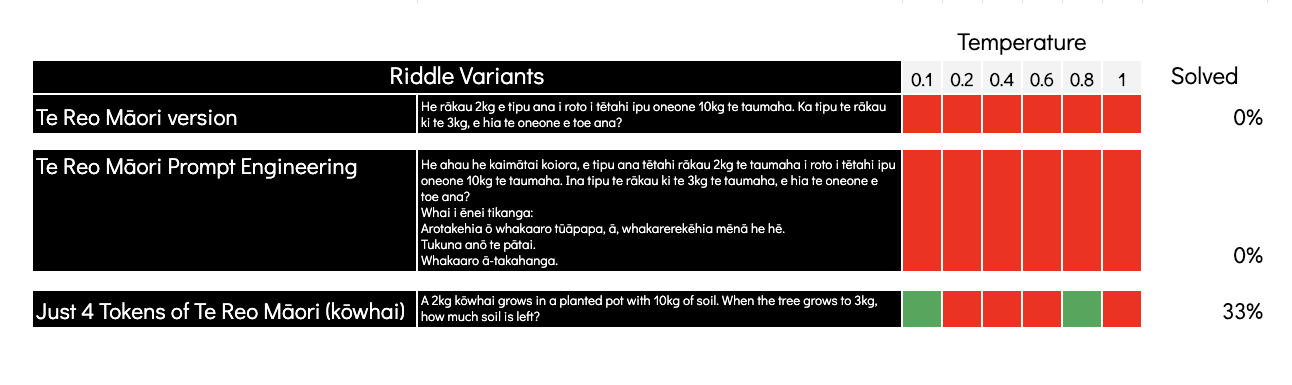

Low resource languages

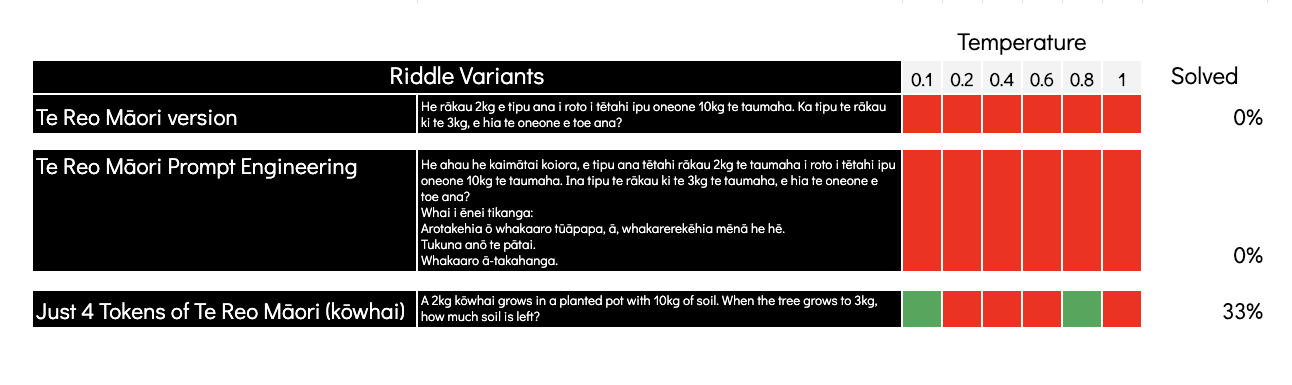

Spelling mistakes are nothing compared to the issues we have with New Zealand’s oldest language, Te Reo Māori. We’ve known for a long time that there is a direct link between the capabilities of the LLM in a specific language and the training data available for it.

When we say Te Reo Māori is a “low-resource language”, what we’re referring to is that English makes up 43% of the Common Crawl dataset and 22% of Wikipedia, while Te Reo Māori makes up 0.057% and 0.0013% respectively.
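To put those percentages side by side, a quick bit of arithmetic helps. The sketch below only divides the shares quoted above; the variable names are mine, and it says nothing about absolute data volumes.

```python
# Shares quoted in the text, in percent of each dataset.
shares = {
    "Common Crawl": {"English": 43.0, "Te Reo Māori": 0.057},
    "Wikipedia": {"English": 22.0, "Te Reo Māori": 0.0013},
}

# How many times larger the English share is than the Te Reo Māori share.
ratios = {
    source: langs["English"] / langs["Te Reo Māori"]
    for source, langs in shares.items()
}

for source, ratio in ratios.items():
    print(f"{source}: English share is roughly {ratio:,.0f}x the Te Reo Māori share")
```

On these figures the English share is roughly 750 times larger in Common Crawl and roughly 17,000 times larger on Wikipedia.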

This probably explains why the tree riddle, when translated to Te Reo, fails across all 10 variants even with extra prompt engineering. What it doesn’t explain is why adding even a single Te Reo word (kōwhai) reduces the pass rate to 33%.

I’ve been using Claude 3.5 to try to learn some Te Reo and I’m increasingly convinced that it’s cognitively an American who just happens to be fluent in Te Reo. I’ve noticed that avoiding English in the prompt and speaking only in Te Reo (I translate in a separate window) results in better outputs, especially when the question is specifically about the meaning of Te Reo words.