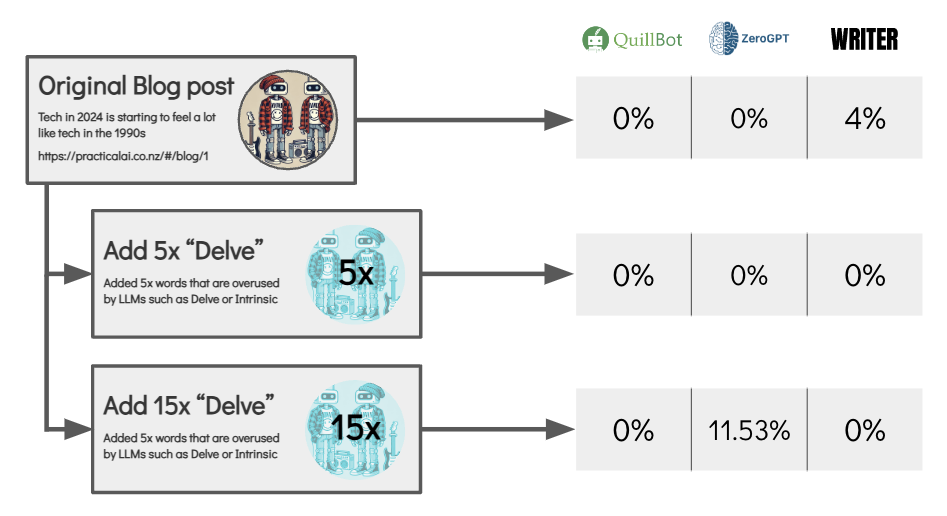

I actually think we’ve already passed peak AI-slop. The window in which low-quality AI-generated content could be passed off as human-made and successfully monetized was very short, and hopefully it has already closed.

Companies are also learning that tacking the letters AI onto a product isn’t an AI strategy. In fact, it’s driving consumers away, and any attempt to hide AI cost-cutting risks public shaming on Reddit. What’s overlooked about AI-slop is that it doesn’t add anything novel. AI’s strength is intelligence, not cheap content generation; we already had Fiverr and content farms long before ChatGPT and LLMs.

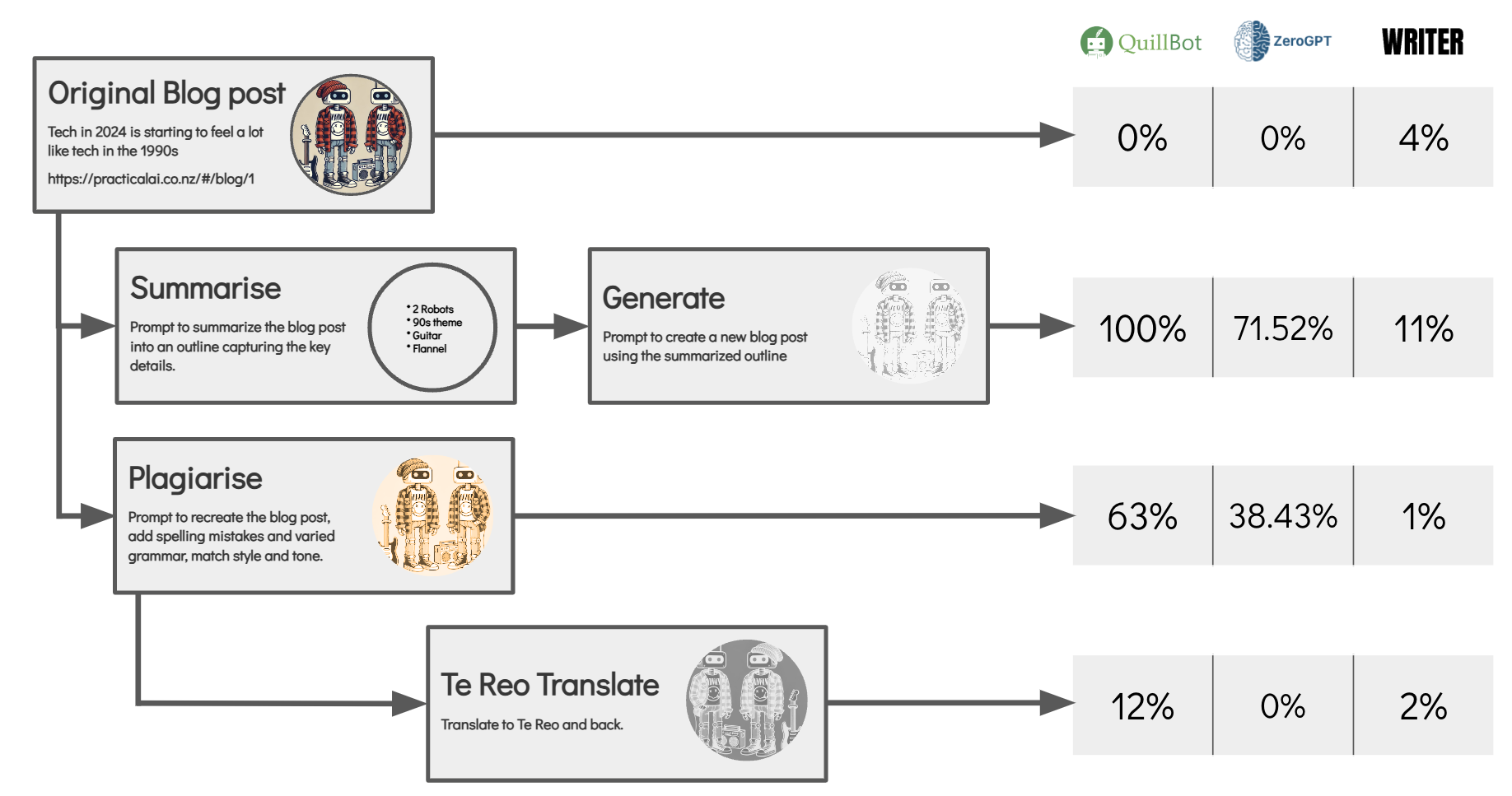

As with the concerns around job market disruption, AI doesn’t so much introduce a problem as exacerbate an existing one, usually one we’ve been in denial about. Much of the focus on detecting AI-generated content comes from education, but as any hiring manager or parent will tell you, there was already a disconnect between students’ grades and their actual knowledge and skills.

The same goes for plagiarism and for low-quality content in general; these aren’t new problems. No recipe on the internet was ever improved by the author’s rambling life story, nor should we pretend AI invented the “Big-4 intern” style of writing it’s now mimicking. The problems were already here. AI has made them worse, and the only thing that’s changed is that we can no longer ignore them.