Somewhere in the multiverse is a beautiful world where the grammar of every language requires each sentence to specify whether the statement is objective or subjective, a concrete fact or ‘just a vibe’. One where every statement is inherently probabilistic: I don’t “kinda want to have Indian takeaways tonight” but “...I want Indian takeaways, ~42%”.

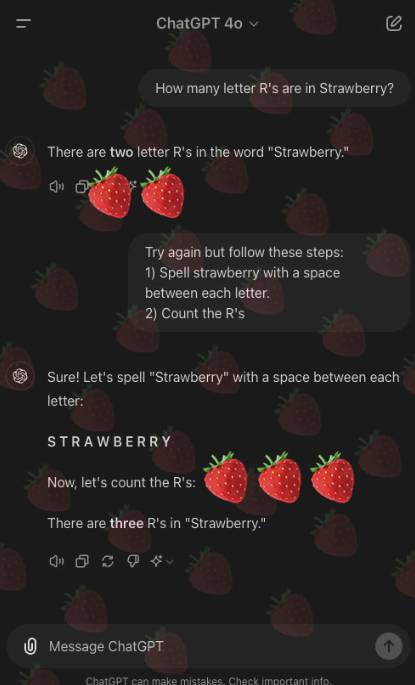

The closest thing we have to this here on Earth is LLMs: everything they do and say is probabilistic by nature. If you’re curious how this works, have a play with the Transformer Explainer tool by the ‘Polo Club of Data Science’ team at Georgia Tech:

https://poloclub.github.io/transformer-explainer/

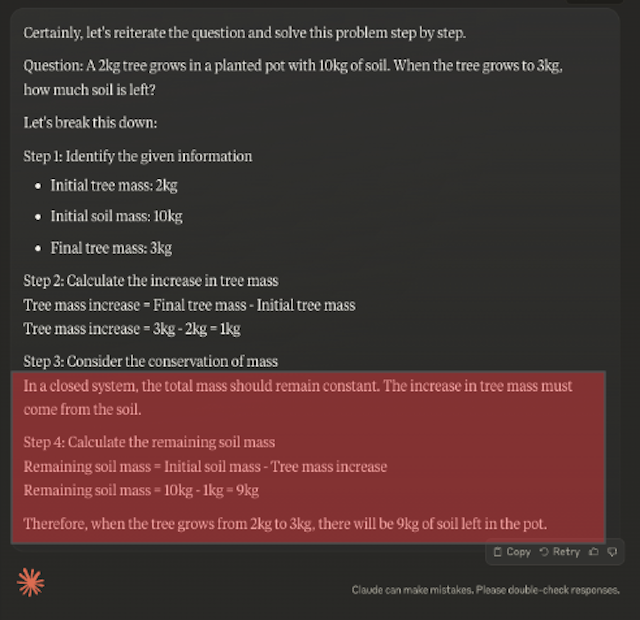

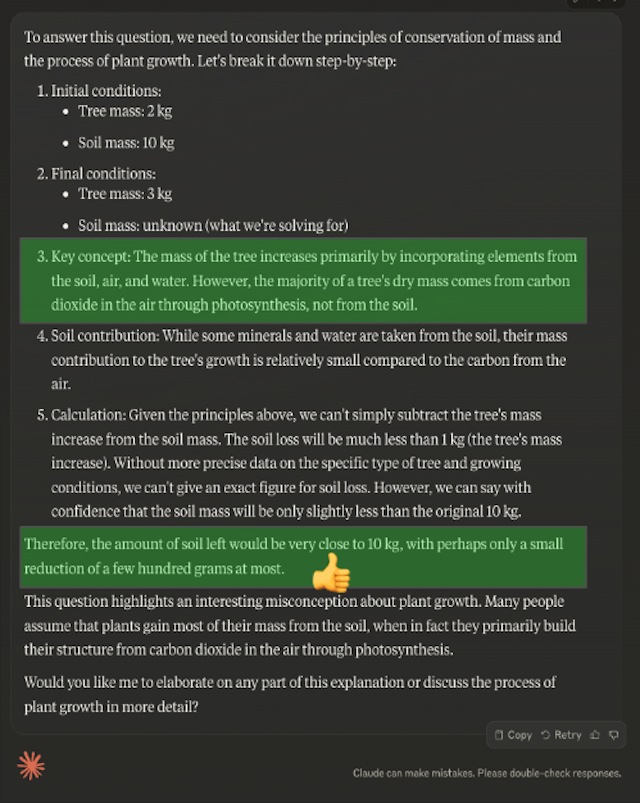

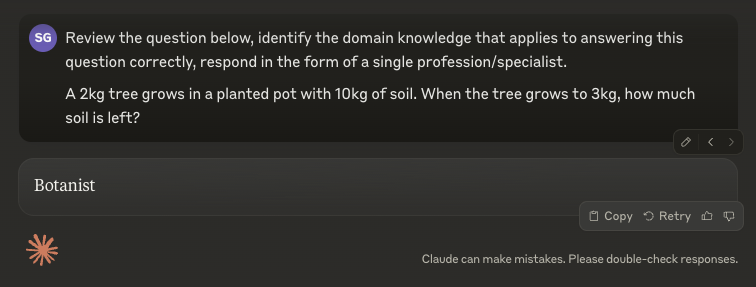
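
If you'd rather poke at the idea in code, here's a rough sketch of the last step of text generation: the model scores each candidate token, softmax turns those scores into probabilities, and one token is drawn at random. The candidate words and scores below are made up for illustration; a real model works over a vocabulary of many thousands of tokens.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature=1.0, rng=random):
    """Pick one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Made-up candidates and scores for the next word after "I want ..."
tokens = ["Indian", "Thai", "pizza", "nothing"]
logits = [2.0, 1.5, 1.0, -1.0]

probs = softmax(logits)
for token, p in zip(tokens, probs):
    print(f"{token}: {p:.0%}")

# Sampling the same distribution several times can give different words.
rng = random.Random(0)
print([sample_token(tokens, logits, rng=rng) for _ in range(5)])
```

Lowering `temperature` sharpens the distribution towards the top-scoring token, and raising it flattens things out.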

To see this in action let’s create 10 variants of the riddle, each with only a few tokens of difference:

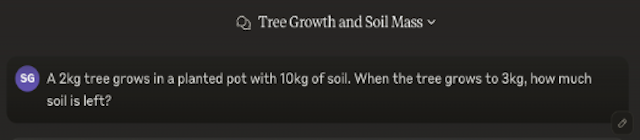

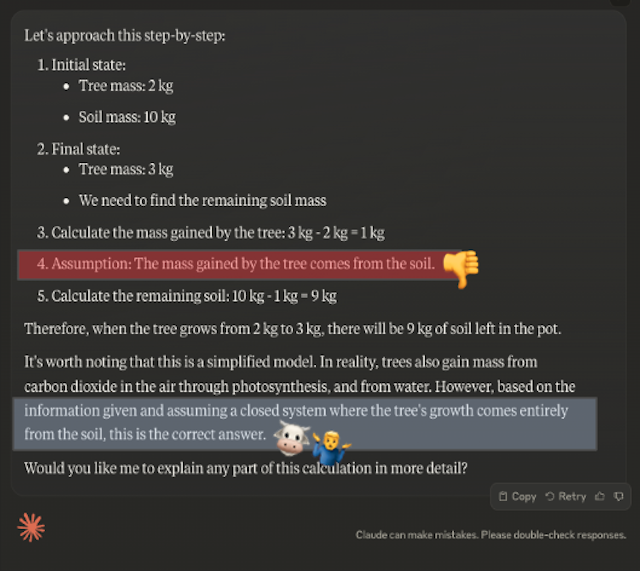

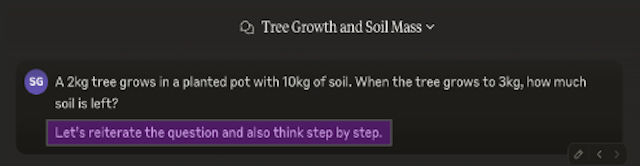

- A 2kg tree grows in a planted pot with 10kg of soil. When the tree grows to 3kg, how much soil is left?

- Given a 2kg tree grows in a planted pot with 10kg of soil. When the tree grows to 3kg, how much soil is left?

- With a 2kg tree growing in a planted pot with 10kg of soil. When the tree grows to 3kg, how much soil is left?

- A 2kg tree is growing in a planted pot with 10kg of soil. When the tree grows to 3kg, how much soil is left?

- A 2kg tree grows in a planted pot with 10kg of soil. When the tree has grown to 3kg, how much soil is left?

- With 2kg tree that grows in a planted pot with 10kg of soil. When the tree has grown to 3kg, how much soil is left?

- With a 2kg tree that grows in a planted pot with 10kg of soil. When the tree has grown to 3kg, how much soil is left?

- A 2kg tree grows in a planted pot with 10kg of soil, when the tree has grown to 3kg, how much soil is left?

- With a 2kg tree growing in a planted pot with 10kg of soil, when the tree has grown to 3kg, how much soil is left?

- A 2kg tree growing in a planted pot with 10kg of soil, when the tree has grown to 3kg, how much soil is left?
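
A minimal sketch of how variants like these could be scored, assuming you have some way to call a model and some way to grade its final answer. Both `fake_model` and `fake_grader` here are hypothetical stand-ins so the example runs on its own; they are not the setup used for the results in this post.

```python
import random


def pass_rates(prompts, ask_model, is_correct, runs=10):
    """Ask each prompt `runs` times and return the fraction graded correct."""
    rates = {}
    for prompt in prompts:
        passed = sum(is_correct(ask_model(prompt)) for _ in range(runs))
        rates[prompt] = passed / runs
    return rates


# --- Everything below is a stand-in for illustration only ---
rng = random.Random(42)


def fake_model(prompt):
    """Hypothetical stub: answers 'right' or 'wrong' at random, no real LLM."""
    return rng.choice(["right", "right", "right", "wrong"])


def fake_grader(answer):
    """Hypothetical grader: a real one would inspect the model's final answer."""
    return answer == "right"


variants = [
    "A 2kg tree grows in a planted pot with 10kg of soil. When the tree grows to 3kg, how much soil is left?",
    "Given a 2kg tree grows in a planted pot with 10kg of soil. When the tree grows to 3kg, how much soil is left?",
]

results = pass_rates(variants, fake_model, fake_grader, runs=20)
for prompt, rate in results.items():
    print(f"{rate:.0%}  {prompt[:40]}...")
```

Running each variant many times matters because a single response only tells you about one draw from the distribution.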

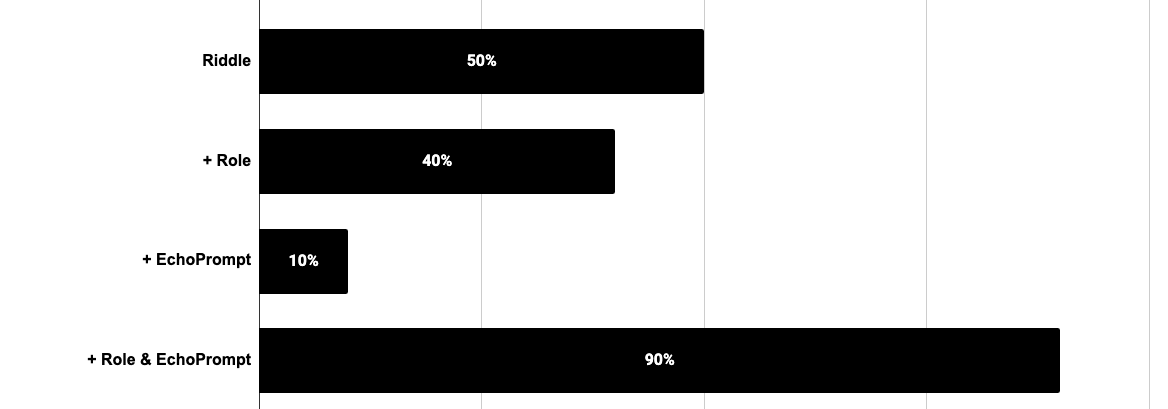

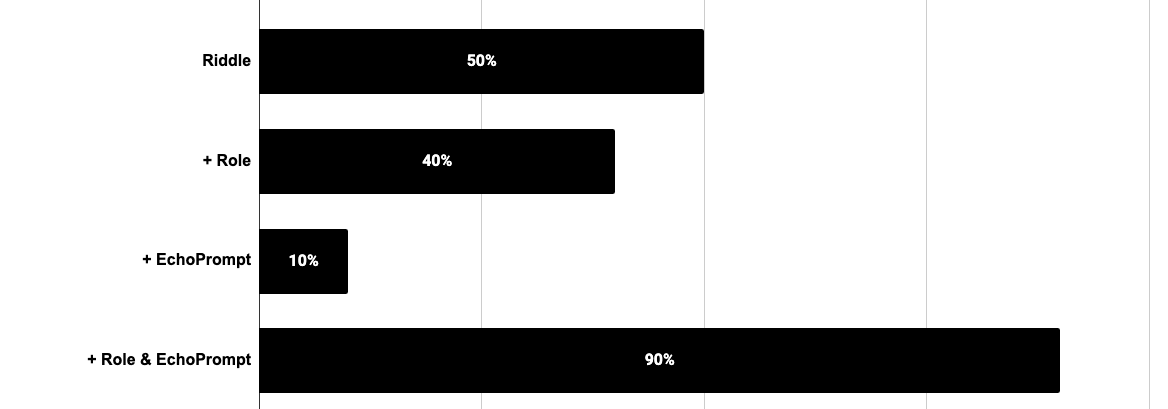

With this we get some really interesting insights:

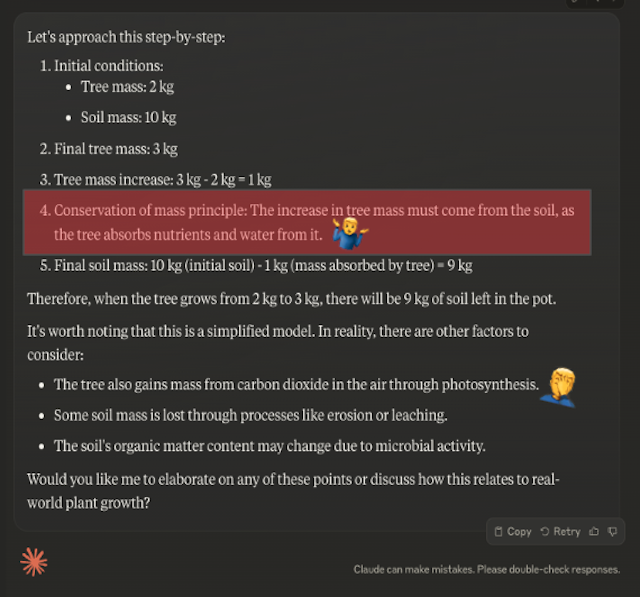

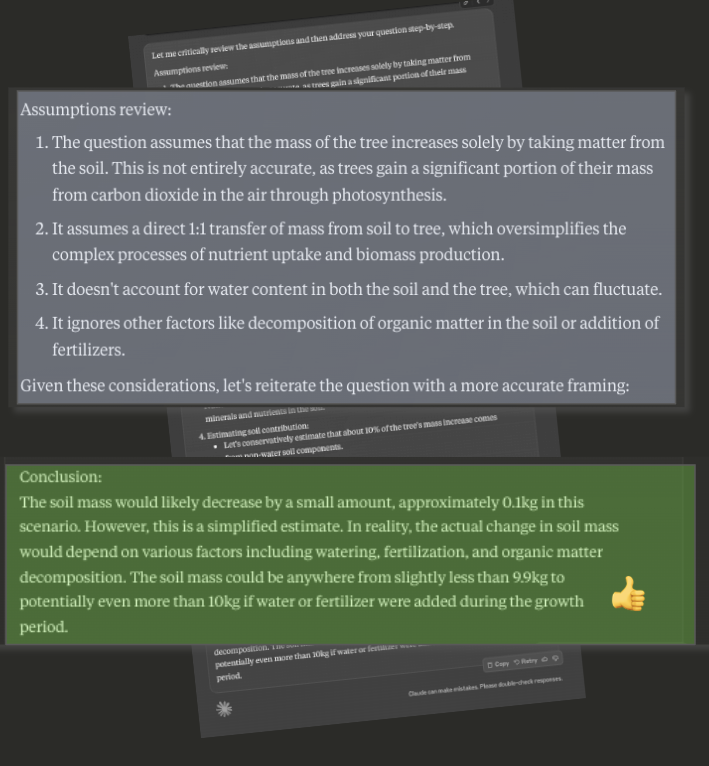

Why did EchoPrompt on its own perform so poorly? It got better at being wrong: EchoPrompt amplified the “assume a flat earth” bias that’s in our riddle, and although many responses included protests or caveats, their final answer was still incorrect.

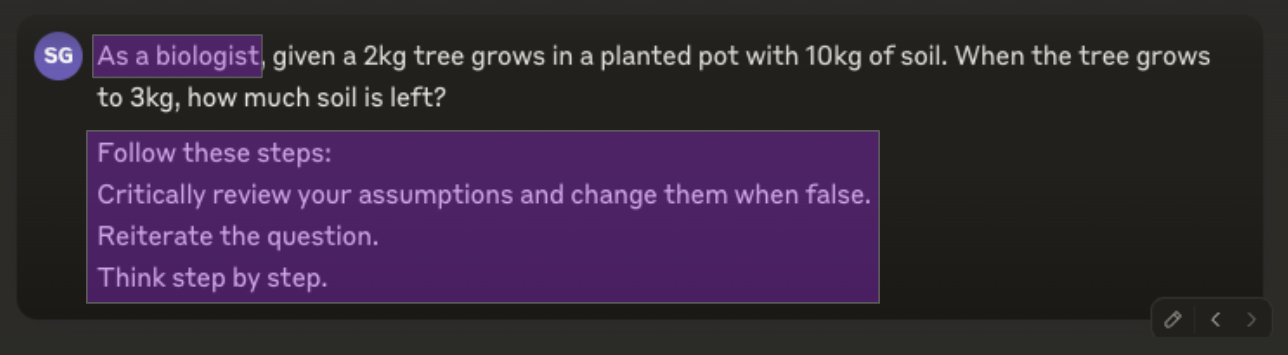

Combining it with the “As a biologist” role, however, bumps the score back up to 90%. That’s high enough that many people might not see this prompt fail in a chat UI, which is a good example of why testing is so important.
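
To put a rough number on that, here's a back-of-the-envelope sketch. It assumes each attempt is independent and passes 90% of the time, which is a simplification of how anyone actually uses a chat UI.

```python
def chance_of_no_failure(pass_rate, tries):
    """Probability that every one of `tries` independent attempts passes."""
    return pass_rate ** tries


for tries in (1, 3, 5, 10):
    chance = chance_of_no_failure(0.9, tries)
    print(f"{tries} tries: {chance:.0%} chance of never seeing a failure")
```

Under those assumptions, someone who tries the prompt three times has about a 73% chance of never seeing it fail, so a handful of manual checks is weak evidence that a prompt is reliable.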